Big Data

"Big data" is a concept that encompasses a variety of technologies. Simply put, it refers to a collection of data that cannot be captured, managed, and processed with conventional software tools within an acceptable period of time. IBM characterizes big data by four Vs: Volume, Variety, Velocity, and Value.

Today, the term "big data" refers not only to the size of the data itself, but also to the tools, platforms, and analysis systems used to collect and process it. The purpose of big data research and development is to build big data technologies and apply them in related fields, driving breakthroughs by solving the problems of processing huge amounts of data.

Big data technology refers to the rapid extraction of valuable information from many different types of data, and it is the core of solving big data problems. Therefore, the challenges of the big data era lie not only in obtaining valuable information from huge amounts of data, but also in strengthening research and development of big data technology in order to stay at the forefront of the times.

To understand the concept of big data, start with the word "big", which refers to the size of the data: big data generally means datasets of 10 TB (1 TB = 1024 GB) or more. Big data also differs from the "massive data" of the past. Its basic characteristics can be summarized by the four Vs (Volume, Variety, Value, and Velocity), i.e., large volume, diversity, low value density, and high speed.
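The 10 TB rule of thumb comes down to simple unit arithmetic. The following is a minimal Python sketch, assuming binary units as in the text (1 TB = 1024 GB) and, by the same convention, 1 PB = 1024 TB. The function names are hypothetical, and the threshold is only the rough guideline stated above, not a formal definition.

```python
# Binary unit sizes, following the text's convention 1 TB = 1024 GB.
GB_PER_TB = 1024
TB_PER_PB = 1024  # assumption: same binary convention for petabytes

# Rough threshold quoted in the text: big data generally starts at 10 TB.
BIG_DATA_THRESHOLD_TB = 10


def gb_to_tb(size_gb: float) -> float:
    """Convert gigabytes to terabytes (1 TB = 1024 GB)."""
    return size_gb / GB_PER_TB


def tb_to_pb(size_tb: float) -> float:
    """Convert terabytes to petabytes (1 PB = 1024 TB)."""
    return size_tb / TB_PER_PB


def meets_volume_threshold(size_gb: float) -> bool:
    """Return True if a dataset of size_gb gigabytes reaches the 10 TB rule of thumb."""
    return gb_to_tb(size_gb) >= BIG_DATA_THRESHOLD_TB


if __name__ == "__main__":
    print(gb_to_tb(10240))               # 10.0
    print(meets_volume_threshold(5000))  # False (about 4.9 TB)
    print(meets_volume_threshold(10240)) # True
    print(tb_to_pb(2048))                # 2.0
```

Volume alone does not make a dataset "big data" in the sense used here; the other three Vs matter as well.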

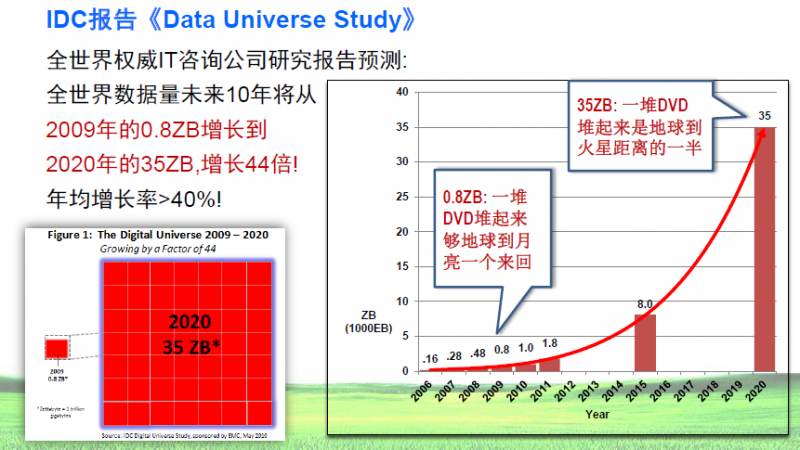

Volume: the amount of data is huge, jumping from the terabyte (TB) scale to the petabyte (PB) scale.

Variety: there are many types of data, such as network logs, videos, pictures, and location information.

Value: value density is low. Take video as an example: during continuous, uninterrupted monitoring, the useful data may last only one or two seconds.
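Low value density can be expressed as the fraction of recorded data that is actually useful. A minimal Python sketch, assuming a hypothetical day of continuous surveillance footage in which only 2 seconds are useful; the numbers and the `value_density` name are illustrative, not measurements.

```python
def value_density(useful_seconds: float, total_seconds: float) -> float:
    """Fraction of recorded footage that is actually useful."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    return useful_seconds / total_seconds


# Hypothetical example: one day of continuous surveillance video
# in which only 2 seconds capture the event of interest.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s

density = value_density(2, SECONDS_PER_DAY)
print(f"{density:.6%}")  # 0.002315%
```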

Velocity: processing must be fast, following the "1-second rule" (analysis results should be delivered within seconds), which is what fundamentally distinguishes big data from traditional data mining. The Internet, the mobile Internet, cell phones, tablets, PCs, and the wide variety of sensors spread across the globe are all either sources or carriers of data.
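Meeting the "1-second rule" usually means handling each event as it arrives instead of waiting for a batch job. A minimal Python sketch of one common streaming technique, a sliding-window counter, assuming events carry non-decreasing timestamps in seconds; the class name `SlidingWindowCounter` is hypothetical and not taken from any particular framework.

```python
from collections import deque


class SlidingWindowCounter:
    """Counts events whose timestamps fall in the window (now - window, now].

    Each event costs amortized O(1) work, so the current count is
    available immediately rather than after a batch job finishes.
    """

    def __init__(self, window: float = 1.0) -> None:
        self.window = window
        self._timestamps: deque[float] = deque()

    def add(self, timestamp: float) -> int:
        """Record an event (timestamps must be non-decreasing) and return the live count."""
        self._timestamps.append(timestamp)
        self._evict(timestamp)
        return len(self._timestamps)

    def _evict(self, now: float) -> None:
        # Drop events at or before now - window; they are outside the window.
        while self._timestamps and self._timestamps[0] <= now - self.window:
            self._timestamps.popleft()


counter = SlidingWindowCounter(window=1.0)
counts = [counter.add(t) for t in [0.0, 0.2, 0.5, 0.9, 1.1, 1.6]]
print(counts)  # [1, 2, 3, 4, 4, 3]
```

A deque is used because old events always leave from the front and new ones join at the back, so both operations stay cheap.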

Big data technology describes a new generation of technologies and architectures designed to extract value economically from a wide variety of very large datasets through high-speed capture, discovery, and analysis. The rapidly growing volume of data in the future urgently calls for such new processing techniques.
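The text does not name a specific architecture. One widely used pattern for data too large for a single machine is MapReduce-style split-and-merge: compute partial results independently on chunks of data, then combine them. The following is a minimal, single-process Python illustration of that pattern using word counting; it is not a distributed implementation, and the function names are hypothetical.

```python
from collections import Counter
from functools import reduce
from typing import Iterable


def map_phase(document: str) -> Counter:
    """Map step: turn one document into partial word counts."""
    return Counter(document.lower().split())


def reduce_phase(left: Counter, right: Counter) -> Counter:
    """Reduce step: merge two partial counts into one."""
    return left + right


def word_count(documents: Iterable[str]) -> Counter:
    """Apply the map step to every document, then fold the partial results together.

    In a real cluster the map calls would run in parallel on different
    machines; here they simply run one after another in a single process.
    """
    partials = map(map_phase, documents)
    return reduce(reduce_phase, partials, Counter())


docs = ["big data needs new tools", "new data new value"]
print(word_count(docs).most_common(2))  # [('new', 3), ('data', 2)]
```

The key property is that each map call needs only its own chunk, and merging partial counts gives the same result in any grouping, which is what makes the work easy to spread across machines.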

In the "big data" era, people can access large amounts of information promptly and comprehensively through the Internet, social networks, and the Internet of Things. At the same time, the form of information itself keeps changing and evolving, causing information carriers to expand far faster than people had imagined.

The arrival of the cloud era has gradually shifted the creation of data from enterprises to individuals, and the vast majority of data produced by individuals is unstructured, such as pictures, documents, and videos. As information technology spreads, more and more enterprise office processes are carried out over the network, and the data they generate is also mostly unstructured. It was projected that by 2012, unstructured data would account for more than 75% of all data on the Internet. The "big data" from which insight is extracted often consists largely of such unstructured data.

Traditional applications such as data warehouse systems, business intelligence (BI), and link mining typically tolerate processing times measured in hours or days. "Big data" applications, by contrast, emphasize real-time processing: online personalized recommendation, stock trade processing, and real-time traffic information require processing times of minutes or even seconds.
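The contrast between hours-or-days batch processing and real-time requirements can be seen in how an aggregate is maintained. The sketch below keeps an average up to date as each value arrives instead of recomputing it over the full history. It is a minimal Python illustration assuming a stream of numeric values; the prices are made-up numbers and `RunningMean` is a hypothetical name.

```python
from statistics import mean


class RunningMean:
    """Incrementally maintained average: O(1) work per new value.

    A batch system would recompute mean() over the whole history on a
    schedule; this object updates its answer as each value arrives.
    """

    def __init__(self) -> None:
        self.count = 0
        self.value = 0.0

    def update(self, x: float) -> float:
        self.count += 1
        # Move the current mean toward x by 1/count of the difference.
        self.value += (x - self.value) / self.count
        return self.value


prices = [10.0, 12.0, 11.0, 13.0]  # hypothetical stream of trade prices
rm = RunningMean()
for p in prices:
    latest = rm.update(p)

print(latest, mean(prices))  # 11.5 11.5
```

Both approaches give the same answer here; the difference is that the incremental version has it ready after every event, which is what minute- or second-level requirements demand.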